Connecting Machine Learning with SVG: Working with BlazePose

Introduction

Lately I've had the good fortune to work on a passion project of mine: creating an SVG artworks framework alongside a collaborative of artists interested in Scalable Vector Graphics. While I haven't officially launched the framework yet, I've been writing more about SVG lately. This post continues the trend but ties in another of my passions: machine learning.

In particular, this is the first in a series of posts exploring an application of machine vision to the creation of SVG artworks. The application involves pose estimation. Several years ago I started working with the tensorflow ecosystem on projects revolving around machine learning and computer vision. Around that time Google released PoseNet, a pre-trained model for pose estimation designed for web development using tensorflow.js.

PoseNet enables pose estimation using input from images, videos and devices like webcams. With PoseNet, engineers can create applications that enable a system to "see" figures identifiable by key features related to pose -- like elbows, knees, wrists and hips. Since its release, PoseNet has been used in many applications including gaming, interactive fitness, gesture recognition, movement analysis, sports science, physical therapy ... the list goes on. Given my long-standing interests in machine learning, sports, kung fu animation, etc., it's no surprise that I landed on pose estimation as my next avenue of exploration.

Setting up for PoseNet

My initial plan was to engage in a quick spike to explore recent developments and understand what it would take to integrate PoseNet models with my framework. Always the optimist, I figured I'd hammer out some proofs and have a new ml module up in short order. But, as always, the devil's in the details and I encountered enough gotchas that I figured an initial post on getting started was warranted -- if for no other reason than to help others with similar interests and to document aspects of the SVG artworks framework as its development unfolds.

The Reports of its Demise are Greatly Exaggerated

The first question that came up for me was: tensorflow.js? Is that still a thing? Now, I've had extensive experience with tensorflow.js -- so I feel I have a right to be wary 😅. Back when I jumped into architecting and building machine-learning applications, tensorflow was the most well-supported and most widely adopted system available for applied ML. And tensorflow.js (tensorflow with javascript bindings) was pretty much the only thing around for doing ML in web applications. More recently, newer and purportedly more usable systems (namely PyTorch) have come into favor, especially among the python crowd, and I hear a lot of "oh, no-one uses tensorflow anymore". But, to be honest, I feel the reports of its demise are greatly exaggerated. Tensorflow continues to boast a large community of support. Many pre-trained models are available and tested on Tensorflow -- not the least being PoseNet and its cousins. So it is with confidence that I'm once again adopting tensorflow.js -- at present for integration with my SVG Artworks framework.

Setting up a Build System with Vite

My next problem was setting up a build system. Whenever I have the luxury of engaging in solo engineering I always attempt to be platform agnostic. I mention that here because I'm working primarily in pure vanilla javascript and haven't committed to any particular opinionated framework or build system. But with the need to once again incorporate tensorflow and associated models into my applications, and eventually to invite potential collaborators to contribute to the system, it's high time to look at build tooling.

At this point in time, my requirements are simple enough. I need a tool that will quickly and easily generate a minified bundle containing all and only the dependencies I need for particular posts and demos. Lean and mean. And for now, that turns out to be Vite. As its own website puts it: "Vite makes web development simple again". Vite accelerates my process by letting me quickly create highly optimized static assets, leveraging native ES Modules, for blogging, application development and demos. For development I get seamless hot swaps and reloads with its built-in server.

Procedure

Getting started with Vite was a straightforward formula:

- Create the Vite project (I chose plain vanilla):

    npm create vite@latest <> -- --template vanilla

- cd to the newly created project root directory and run npm install (Vite provides a pre-defined package.json for a vanilla project).

- Install the required tensorflow libraries:

    npm install @tensorflow/tfjs @tensorflow-models/pose-detection

With this simple recipe I was good to go. The tensorflow installations went smoothly and I ended up with a self-contained project development environment.

Modularity with Javascript

I should mention that I often work in python where I leverage conda to avoid versioning and environment clashes. But javascript's NPM is a different story. Using Vite and local npm installs I have everything I need neatly bundled in a root development directory that looks like this:

    ./project_root
    |-- dist
    |-- index.html
    |-- node_modules
    |-- package.json
    |-- package-lock.json
    |-- public
    |-- src

This structure is very simple and a typical starting point for web apps. The important thing to note for purposes of getting started is the dist directory. Vite provides a build routine that you can execute with:

$ npm run build

What I love about it is that it will generate a dist directory which will contain your HTML index alongside an assets directory. The assets directory contains a minified javascript bundle containing all and only the ES modules required for your application which you can drop anywhere as needed (for example that's exactly what I did for this blog).

    /dist
    |-- assets
    |   |-- index-###.js
    |-- index.html

And that's it -- that's all there is to it. What I love about this is it's a very modular solution (for javascript anyway) and for my current purposes it suits my needs perfectly! So with the setup out of the way we can develop some good stuff!
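One caveat on dropping the bundle anywhere: by default Vite emits asset URLs relative to the site root, so a dist directory served from a sub-directory may fail to find its script. Here is a minimal sketch of an optional vite.config.js that switches to relative paths. This file is not part of the setup described above, so treat it as an assumption about how the bundle gets deployed.

```javascript
// vite.config.js (optional; an assumption, not part of the setup above)
import { defineConfig } from 'vite';

export default defineConfig({
  // Emit relative asset URLs (./assets/...) so that dist/ can be served
  // from any sub-directory rather than only from the site root.
  base: './',
});
```

With this in place, npm run build should write an index.html whose script tag points at ./assets/ rather than /assets/.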

Pose Estimation with a View toward SVG

Using BlazePose

In introducing this post I made reference to PoseNet, an open source model from Google that was released several years back. Since its release, however, significant advances have been made in pose detection and we've seen the release of several new models supporting applied ML. So for my purposes of evaluating the current state of the art I landed on BlazePose, for several reasons:

-

BlazePose is a new addition to the family of pose estimation models trained on COCO

-

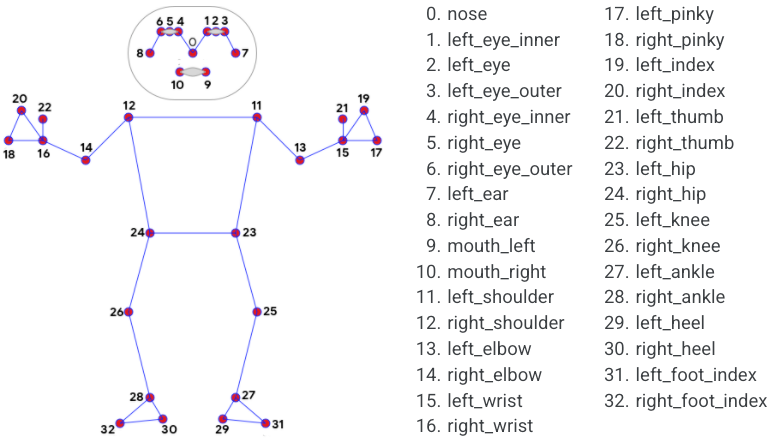

It has greater topological resolution than MoveNet (a PoseNet derivative) with 33 as opposed to 17 keypoints

-

It runs on tensorflow.js with proven performance (30-60 FPS on mobile devices).

BlazePose works really well on individuals. It uses a two stage architecture:

-

Defining a region of interested on frame 1 input, and

-

Carrying on with prediction on 33 topological keypoints.

Figure 1. Blaze pose full body landmarks.

Coding BlazePose

Having set up an initial project as I described above, coding to BlazePose was straightforward. Here's a recipe using vanilla ES6 modules.

- Import the libraries:

    import * as poseDetection from '@tensorflow-models/pose-detection';
    import * as tf from '@tensorflow/tfjs';
    import '@tensorflow/tfjs-backend-webgl';

- Create a detector:

    // INITIALIZE POSE DETECTION
    const model = poseDetection.SupportedModels.BlazePose;
    const detectorConfig = {
      runtime: 'mediapipe',
      enableSmoothing: true,
      modelType: 'full',
      solutionPath: 'https://cdn.jsdelivr.net/npm/@mediapipe/pose',
    };
    PoseLoopController.poseDetector = await poseDetection.createDetector(
      model,
      detectorConfig
    );

That's it! Once you have your detector you can kick off pose estimation.

    const poseData = await this.poseDetector.estimatePoses(
      this.video,
      this.poseEstimationConfig,
      timeStamp
    );

Pain Point

Full disclosure -- one of the main reasons I'm writing this is that I hit a pain point during my initial spike with BlazePose. While the Google site says you should be able to use the tfjs runtime, the only way I could get BlazePose to work was using mediapipe. The relevant lines in the detectorConfig above are runtime: 'mediapipe' and solutionPath.
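For anyone who wants to flip between the two runtimes while debugging, here's a minimal sketch of a config builder. The helper name buildDetectorConfig is hypothetical. The tfjs branch follows the configuration shape described in the pose-detection documentation, but given the trouble described above, treat that branch as untested.

```javascript
// Hypothetical helper (not part of the pose-detection library):
// build a BlazePose detectorConfig for the requested runtime.
function buildDetectorConfig(runtime) {
  // Options shared by both runtimes, taken from the config shown earlier.
  const shared = { enableSmoothing: true, modelType: 'full' };

  if (runtime === 'mediapipe') {
    return {
      ...shared,
      runtime: 'mediapipe',
      // Location the MediaPipe pose assets are loaded from.
      solutionPath: 'https://cdn.jsdelivr.net/npm/@mediapipe/pose',
    };
  }
  if (runtime === 'tfjs') {
    // Assumption: the tfjs runtime needs no solutionPath.
    return { ...shared, runtime: 'tfjs' };
  }
  throw new Error(`Unknown runtime: ${runtime}`);
}

console.log(buildDetectorConfig('mediapipe').runtime); // mediapipe
```

The result can be passed as the second argument to poseDetection.createDetector in place of the literal detectorConfig.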

Input

For my initial foray I'm interested in webcam input for pose estimation in real-time. Web browsers provide an API for webcam access enabling client code to set up streams. The following fragment shows a very basic setup routine to get laptop camera access:

    let video = document.getElementById('video');

    // START THE CAMERA ...
    const stream = await navigator.mediaDevices.getUserMedia({
      video: { width: 640, height: 480 },
      audio: false
    });
    video.srcObject = stream;

    /*
     * Promise to wait 'till camera is ready...
     */
    await new Promise( (resolve) => {
      video.onloadedmetadata = () => {
        video.play();
        PoseLoopController.video = video;
        resolve();
      };
    } );

The code above presupposes a video element embedded in the HTML client to the script:

<video id="video" autoplay playsinline muted></video>
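The setup routine only covers starting the camera. As a complement, here's a minimal sketch of a teardown step that stops every track on the stream so the browser releases the webcam. The helper name stopCamera is hypothetical. getTracks() and stop() are the standard MediaStream and MediaStreamTrack methods.

```javascript
// Hypothetical teardown helper: stop all tracks on the video's stream
// and detach the stream from the element. Returns the number of tracks stopped.
function stopCamera(video) {
  const stream = video.srcObject;
  if (!stream) return 0; // nothing attached, nothing to stop

  const tracks = stream.getTracks();
  for (const track of tracks) {
    track.stop(); // ends capture for this track
  }
  video.srcObject = null;
  return tracks.length;
}

// In the browser:
// stopCamera(document.getElementById('video'));
```

Since the function only touches srcObject, getTracks and stop, it can be exercised with a plain stand-in object outside the browser.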

Connecting the Dots with SVG

Finally, with all the infrastructure in place, the stage is set to connect the dots. When you estimate with BlazePose you get a JSON formatted set of keypoints representing skeletal features as shown in Figure 1 above. I've included a specimen in Appendix 1 for anyone who wants to look at the format. So for this first iteration, I just wanted to connect the dots with SVG.
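As a sketch of how that result can be unpacked: the estimate arrives as an array of poses, each carrying a keypoints array (see Appendix 1). I'm assuming the array may be empty when nobody is in frame, so the hypothetical helper below, firstPoseKeypoints, falls back to an empty list in that case.

```javascript
// Hypothetical helper: pull the keypoints of the first detected pose,
// or an empty array when no pose was returned.
function firstPoseKeypoints(poses) {
  if (!Array.isArray(poses) || poses.length === 0) return [];
  return poses[0].keypoints ?? [];
}

// Trimmed-down specimen in the Appendix 1 format (values rounded).
const samplePoses = [{
  keypoints: [
    { x: 356.48, y: 250.06, z: -1.11, score: 0.9996, name: 'nose' },
    { x: 552.49, y: 466.18, z: -0.31, score: 0.9921, name: 'left_shoulder' },
  ],
}];

console.log(firstPoseKeypoints(samplePoses).length); // 2
console.log(firstPoseKeypoints([]).length);          // 0
```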

To this end, I set up an SVG element in my HTML:

    <svg id="svg_root"
         width="640px"
         height="480px"
         viewBox="0 0 640 480"></svg>

Elsewhere, in my javascript module ...

    init( svgElement ) {
      this.svgRoot = svgElement;
    },
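One assumption baked into this setup is that the keypoint coordinates are in the pixel space of the input video and that this matches the 640 by 480 viewBox. If the two differ, the points need rescaling before they are drawn. Here's a minimal sketch; makeScaler is a hypothetical helper, and the source size would typically come from the video element's videoWidth and videoHeight properties.

```javascript
// Hypothetical helper: build a function that maps keypoints from the
// source (video) pixel space into the destination (SVG viewBox) space.
function makeScaler(srcWidth, srcHeight, dstWidth, dstHeight) {
  const sx = dstWidth / srcWidth;
  const sy = dstHeight / srcHeight;
  // Copy the keypoint, replacing only x and y; score, name, etc. pass through.
  return (point) => ({ ...point, x: point.x * sx, y: point.y * sy });
}

// Example: a 1280x960 video drawn into a 640x480 viewBox.
const toSvg = makeScaler(1280, 960, 640, 480);
const scaled = toSvg({ x: 640, y: 480, score: 0.9, name: 'nose' });
console.log(scaled.x, scaled.y); // 320 240
```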

Working off the pose data we can use SVG to (1) draw the joints, and (2) set up a mapping to create a sort of stick person, or "skeleton". Here's the code to draw the point data in SVG:

    // DRAW THE JOINTS
    for( const point of keypoints ) {
      // skip low-confidence points
      if (point.score < 0.5) continue;

      const circle = document.createElementNS("http://www.w3.org/2000/svg", "circle");
      circle.setAttribute("cx", point.x);
      circle.setAttribute("cy", point.y);
      circle.setAttribute("r", "5");
      circle.setAttribute("fill", "lime");
      this.svgRoot.appendChild(circle);
    }

Notice how we skip drawing the low-confidence estimates. If you look at the data format, the BlazePose model provides a confidence score for each estimate. Since low-confidence estimates reflect a greater probability of error, I just skip drawing them for now.
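To see the cutoff in isolation, here's a minimal sketch that applies the same 0.5 threshold to a few scores rounded from the Appendix 1 specimen. The helper name confidentKeypoints is hypothetical.

```javascript
// Hypothetical helper: keep only keypoints at or above a confidence cutoff.
// Note: keypoints with a missing score are dropped as well.
function confidentKeypoints(keypoints, minScore = 0.5) {
  return keypoints.filter((kp) => kp.score >= minScore);
}

// Scores rounded from the Appendix 1 specimen.
const specimen = [
  { name: 'nose', score: 0.9996 },
  { name: 'left_shoulder', score: 0.9921 },
  { name: 'left_elbow', score: 0.0608 },
  { name: 'left_hip', score: 0.0002 },
];

console.log(confidentKeypoints(specimen).map((kp) => kp.name));
// [ 'nose', 'left_shoulder' ]
```

In that specimen only the head and shoulders clear the bar, which fits a subject sitting close to a laptop camera with elbows and hips out of frame.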

The next thing we need for rendering is a mapping for the BlazePose topology:

    SKELETON_CONNECTIONS: [
      ['left_shoulder', 'right_shoulder'],
      ['left_shoulder', 'left_elbow'],
      ['left_elbow', 'left_wrist'],
      ['right_shoulder', 'right_elbow'],
      ['right_elbow', 'right_wrist'],
      ['left_shoulder', 'left_hip'],
      ['right_shoulder', 'right_hip'],
      ['left_hip', 'right_hip'],
      ['left_hip', 'left_knee'],
      ['left_knee', 'left_ankle'],
      ['right_hip', 'right_knee'],
      ['right_knee', 'right_ankle'],
      ['nose', 'left_eye'],
      ['nose', 'right_eye'],
      ['left_eye', 'left_ear'],
      ['right_eye', 'right_ear']
    ]

Armed with the mapping we can connect the dots:

    const keypointMap = {};
    for (const kp of keypoints) {
      keypointMap[kp.name] = kp;
    }

    for (const [p1Name, p2Name] of this.SKELETON_CONNECTIONS) {
      const p1 = keypointMap[p1Name];
      const p2 = keypointMap[p2Name];
      if (!p1 || !p2 || p1.score < 0.5 || p2.score < 0.5) continue;

      const line = document.createElementNS("http://www.w3.org/2000/svg", "line");
      line.setAttribute("x1", p1.x);
      line.setAttribute("y1", p1.y);
      line.setAttribute("x2", p2.x);
      line.setAttribute("y2", p2.y);
      line.setAttribute("stroke", "cyan");
      line.setAttribute("stroke-width", "2");
      this.svgRoot.appendChild(line);
    }

And there we have it. A render method for the pose data returned by the BlazePose model.
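The geometry can also be separated from the DOM work, which makes it easy to check outside the browser. Here's a minimal sketch under that assumption: skeletonSegments is a hypothetical helper that returns plain line coordinates for every connection whose two endpoints both clear the confidence cutoff.

```javascript
// Hypothetical helper: turn keypoints plus a connection list into
// plain line segments, skipping missing or low-confidence endpoints.
function skeletonSegments(keypoints, connections, minScore = 0.5) {
  const byName = new Map(keypoints.map((kp) => [kp.name, kp]));
  const segments = [];
  for (const [aName, bName] of connections) {
    const a = byName.get(aName);
    const b = byName.get(bName);
    if (!a || !b || a.score < minScore || b.score < minScore) continue;
    segments.push({ x1: a.x, y1: a.y, x2: b.x, y2: b.y });
  }
  return segments;
}

// Values rounded from the Appendix 1 specimen.
const sampleKeypoints = [
  { name: 'left_shoulder', x: 552.49, y: 466.18, score: 0.9921 },
  { name: 'right_shoulder', x: 209.28, y: 471.54, score: 0.9974 },
  { name: 'left_elbow', x: 673.16, y: 670.86, score: 0.0608 },
];
const sampleConnections = [
  ['left_shoulder', 'right_shoulder'], // both confident: kept
  ['left_shoulder', 'left_elbow'],     // elbow below cutoff: skipped
  ['left_hip', 'right_hip'],           // endpoints absent: skipped
];

console.log(skeletonSegments(sampleKeypoints, sampleConnections).length); // 1
```

Each returned segment maps directly onto the x1, y1, x2 and y2 attributes of an SVG line element.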

As a final note -- to sample in real time -- I set up a controller using javascript's ubiquitous requestAnimationFrame:

    /**
     * classic controller logic to mediate between
     * model and view...
     */
    const PoseLoopController = {
      ...
      updateEstimates: async function ( timeStamp ) {
        const poseData = await this.poseDetector.estimatePoses(
          this.video,
          this.poseEstimationConfig,
          timeStamp
        );

        this.renderPose( poseData );

        this.estimateRafId = requestAnimationFrame(
          this.updateEstimates.bind(this)
        );
      },

      renderPose: function( data ) {
        this.poseView.renderPose(data);
      },
      ...
    };
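One thing the fragments above leave open is what happens to the previous frame's shapes. The drawing code appends new elements on every call, so in a requestAnimationFrame loop they would pile up unless the SVG root is emptied first. Assuming that isn't handled elsewhere, here's a minimal sketch of a clearing step; clearChildren is a hypothetical helper built on the standard firstChild and removeChild DOM members.

```javascript
// Hypothetical helper: remove every child of a DOM node.
// Returns the number of children removed.
function clearChildren(node) {
  let removed = 0;
  while (node.firstChild) {
    node.removeChild(node.firstChild);
    removed += 1;
  }
  return removed;
}

// In the view's render method, before drawing the new frame:
// clearChildren(this.svgRoot);
```

To stop sampling altogether, the id stored in estimateRafId can be passed to cancelAnimationFrame.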

Result

[Embedded demo: BlazePose Demo Page, with WebCam and SVG panels]

Discussion

I find the results of this initial iteration encouraging. Contrary to what I've been hearing, I found tensorflow.js to be quite usable and readily integrated with my existing (albeit minimal!) infrastructure. I've used tensorflow extensively in the past for model development and training and remain a fan. Admittedly, I haven't applied tensorflow to develop models with attention -- yet -- but results like this are encouraging for when that time comes.

Regarding BlazePose itself, the results of this spike speak volumes. Easy to use (relatively speaking), highly accurate and quite performant. Future efforts will help me assess its readiness for application to the SVG Artworks initiative.

Summary

Once again, this post is the first in a two-part series on leveraging pose estimation for SVG Artworks. This part covered setting up the infrastructure toward integration. In this post I showed:

- How and why I committed to BlazePose for pose estimation,
- The basic recipe to sample data in real time using the mediapipe runtime, and
- How to render pose-data using SVG.

In the next part of this series I'll explore the first of many applications for this sub-system.

Appendix 1: BlazePose Estimate Format

This appendix shows the data format for BlazePose estimates.

export const testData = [

{

"keypoints": [

{

"x": 356.4840316772461,

"y": 250.0550651550293,

"z": -1.1119136810302734,

"score": 0.9996199607849121,

"name": "nose"

},

{

"x": 377.94788360595703,

"y": 223.2425880432129,

"z": -1.0387614965438843,

"score": 0.9989515542984009,

"name": "left_eye_inner"

},

{

"x": 392.9246520996094,

"y": 224.65370178222656,

"z": -1.0387614965438843,

"score": 0.9992958307266235,

"name": "left_eye"

},

{

"x": 404.59362030029297,

"y": 226.76160335540771,

"z": -1.0387614965438843,

"score": 0.9989797472953796,

"name": "left_eye_outer"

},

{

"x": 333.9323425292969,

"y": 225.13166427612305,

"z": -1.0371358394622803,

"score": 0.9990264177322388,

"name": "right_eye_inner"

},

{

"x": 320.34534454345703,

"y": 227.19446182250977,

"z": -1.036323070526123,

"score": 0.9993976354598999,

"name": "right_eye"

},

{

"x": 309.02246475219727,

"y": 230.1283836364746,

"z": -1.0371358394622803,

"score": 0.9992387294769287,

"name": "right_eye_outer"

},

{

"x": 431.91295623779297,

"y": 259.8684883117676,

"z": -0.5970033407211304,

"score": 0.9990710020065308,

"name": "left_ear"

},

{

"x": 300.7639694213867,

"y": 261.9875907897949,

"z": -0.5592080354690552,

"score": 0.9994493126869202,

"name": "right_ear"

},

{

"x": 391.6098403930664,

"y": 296.5780448913574,

"z": -0.9477275609970093,

"score": 0.9995063543319702,

"name": "mouth_left"

},

{

"x": 332.71968841552734,

"y": 299.16006088256836,

"z": -0.9379739761352539,

"score": 0.9995487332344055,

"name": "mouth_right"

},

{

"x": 552.4941253662109,

"y": 466.1836624145508,

"z": -0.3050040900707245,

"score": 0.9921233057975769,

"name": "left_shoulder"

},

{

"x": 209.28165435791016,

"y": 471.54327392578125,

"z": -0.41778045892715454,

"score": 0.9973989725112915,

"name": "right_shoulder"

},

{

"x": 673.1623840332031,

"y": 670.8623313903809,

"z": -0.3901451528072357,

"score": 0.06075190007686615,

"name": "left_elbow"

},

{

"x": 131.7729949951172,

"y": 701.9555854797363,

"z": -0.35966506600379944,

"score": 0.14977993071079254,

"name": "right_elbow"

},

{

"x": 641.864013671875,

"y": 856.4664459228516,

"z": -0.8883930444717407,

"score": 0.01822929084300995,

"name": "left_wrist"

},

{

"x": 135.20204544067383,

"y": 863.7027740478516,

"z": -0.9127771258354187,

"score": 0.05802474543452263,

"name": "right_wrist"

},

{

"x": 659.3319702148438,

"y": 923.6346817016602,

"z": -1.0290077924728394,

"score": 0.02822098881006241,

"name": "left_pinky"

},

{

"x": 115.05788803100586,

"y": 925.6657218933105,

"z": -1.0728992223739624,

"score": 0.07850097864866257,

"name": "right_pinky"

},

{

"x": 610.3805541992188,

"y": 922.3855018615723,

"z": -1.0875296592712402,

"score": 0.050517670810222626,

"name": "left_index"

},

{

"x": 148.2944679260254,

"y": 923.1860733032227,

"z": -1.179376244544983,

"score": 0.1364932507276535,

"name": "right_index"

},

{

"x": 598.1359100341797,

"y": 893.5819244384766,

"z": -0.9404124021530151,

"score": 0.0497734472155571,

"name": "left_thumb"

},

{

"x": 161.11021041870117,

"y": 897.3326683044434,

"z": -0.9810525178909302,

"score": 0.12678517401218414,

"name": "right_thumb"

},

{

"x": 499.7105026245117,

"y": 909.8320770263672,

"z": -0.03601730614900589,

"score": 0.00015843621804378927,

"name": "left_hip"

},

{

"x": 261.3803482055664,

"y": 910.173511505127,

"z": 0.04259592667222023,

"score": 0.00016997529019135982,

"name": "right_hip"

},

{

"x": 493.2014465332031,

"y": 1276.8883895874023,

"z": -0.23429028689861298,

"score": 0.00018667821132112294,

"name": "left_knee"

},

{

"x": 269.8649024963379,

"y": 1273.6931991577148,

"z": -0.1004318967461586,

"score": 0.0000660521473037079,

"name": "right_knee"

},

{

"x": 495.0320053100586,

"y": 1606.7665100097656,

"z": 0.3820171356201172,

"score": 0.00003852893496514298,

"name": "left_ankle"

},

{

"x": 273.22668075561523,

"y": 1602.488021850586,

"z": 0.29606327414512634,

"score": 0.0000035008542909054086,

"name": "right_ankle"

},

{

"x": 503.89259338378906,

"y": 1663.7647247314453,

"z": 0.39949238300323486,

"score": 0.0000321923362207599,

"name": "left_heel"

},

{

"x": 269.63619232177734,

"y": 1660.8712005615234,

"z": 0.3068329095840454,

"score": 0.000008332717698067427,

"name": "right_heel"

},

{

"x": 451.91650390625,

"y": 1703.7100982666016,

"z": -0.36129066348075867,

"score": 0.000050644717703107744,

"name": "left_foot_index"

},

{

"x": 307.6192092895508,

"y": 1698.3306884765625,

"z": -0.47386378049850464,

"score": 0.00003734356869244948,

"name": "right_foot_index"

}

],

"keypoints3D": [

{

"x": -0.01939723640680313,

"y": -0.59392911195755,

"z": -0.269775390625,

"score": 0.9996199607849121,

"name": "nose"

},

{

"x": -0.012170777656137943,

"y": -0.6322293281555176,

"z": -0.256103515625,

"score": 0.9989515542984009,

"name": "left_eye_inner"

},

{

"x": -0.011749839410185814,

"y": -0.6337063312530518,

"z": -0.256103515625,

"score": 0.9992958307266235,

"name": "left_eye"

},

{

"x": -0.011679437011480331,

"y": -0.6341966986656189,

"z": -0.256103515625,

"score": 0.9989797472953796,

"name": "left_eye_outer"

},

{

"x": -0.04084436595439911,

"y": -0.6245983839035034,

"z": -0.251953125,

"score": 0.9990264177322388,

"name": "right_eye_inner"

},

{

"x": -0.04001894220709801,

"y": -0.6251096129417419,

"z": -0.254150390625,

"score": 0.9993976354598999,

"name": "right_eye"

},

{

"x": -0.04083907604217529,

"y": -0.626063883304596,

"z": -0.253173828125,

"score": 0.9992387294769287,

"name": "right_eye_outer"

},

{

"x": 0.04208122566342354,

"y": -0.617120623588562,

"z": -0.1627197265625,

"score": 0.9990710020065308,

"name": "left_ear"

},

{

"x": -0.09006303548812866,

"y": -0.5944186449050903,

"z": -0.1534423828125,

"score": 0.9994493126869202,

"name": "right_ear"

},

{

"x": 0.005040733143687248,

"y": -0.5721349120140076,

"z": -0.2391357421875,

"score": 0.9995063543319702,

"name": "mouth_left"

},

{

"x": -0.03399992361664772,

"y": -0.5603097677230835,

"z": -0.234375,

"score": 0.9995487332344055,

"name": "mouth_right"

},

{

"x": 0.16162842512130737,

"y": -0.4394470155239105,

"z": -0.0804443359375,

"score": 0.9921233057975769,

"name": "left_shoulder"

},

{

"x": -0.16945692896842957,

"y": -0.4227263629436493,

"z": -0.11651611328125,

"score": 0.9973989725112915,

"name": "right_shoulder"

},

{

"x": 0.26471081376075745,

"y": -0.24886244535446167,

"z": -0.1248779296875,

"score": 0.06075190007686615,

"name": "left_elbow"

},

{

"x": -0.24077995121479034,

"y": -0.20644015073776245,

"z": -0.10650634765625,

"score": 0.14977993071079254,

"name": "right_elbow"

},

{

"x": 0.24441960453987122,

"y": -0.07281969487667084,

"z": -0.2763671875,

"score": 0.01822929084300995,

"name": "left_wrist"

},

{

"x": -0.24561065435409546,

"y": -0.07191699743270874,

"z": -0.2130126953125,

"score": 0.05802474543452263,

"name": "right_wrist"

},

{

"x": 0.23677894473075867,

"y": -0.00020848028361797333,

"z": -0.30419921875,

"score": 0.02822098881006241,

"name": "left_pinky"

},

{

"x": -0.2482885867357254,

"y": -0.014158015139400959,

"z": -0.22705078125,

"score": 0.07850097864866257,

"name": "right_pinky"

},

{

"x": 0.19907549023628235,

"y": -0.021193398162722588,

"z": -0.328369140625,

"score": 0.050517670810222626,

"name": "left_index"

},

{

"x": -0.20775923132896423,

"y": -0.027901863679289818,

"z": -0.251953125,

"score": 0.1364932507276535,

"name": "right_index"

},

{

"x": 0.2194294035434723,

"y": -0.0668170154094696,

"z": -0.285400390625,

"score": 0.0497734472155571,

"name": "left_thumb"

},

{

"x": -0.22554269433021545,

"y": -0.06160316243767738,

"z": -0.2288818359375,

"score": 0.12678517401218414,

"name": "right_thumb"

},

{

"x": 0.14885969460010529,

"y": 0.004388710018247366,

"z": 0.017364501953125,

"score": 0.00015843621804378927,

"name": "left_hip"

},

{

"x": -0.1501338928937912,

"y": -0.03282200172543526,

"z": -0.01105499267578125,

"score": 0.00016997529019135982,

"name": "right_hip"

},

{

"x": 0.11079777777194977,

"y": -0.11131850630044937,

"z": -0.1480712890625,

"score": 0.00018667821132112294,

"name": "left_knee"

},

{

"x": -0.05517786741256714,

"y": -0.4290594458580017,

"z": -0.240234375,

"score": 0.0000660521473037079,

"name": "right_knee"

},

{

"x": 0.18389558792114258,

"y": 0.167469322681427,

"z": -0.04730224609375,

"score": 0.00003852893496514298,

"name": "left_ankle"

},

{

"x": -0.042911700904369354,

"y": -0.0934547632932663,

"z": -0.173095703125,

"score": 0.0000035008542909054086,

"name": "right_ankle"

},

{

"x": 0.17212402820587158,

"y": 0.23642432689666748,

"z": 0.0014562606811523438,

"score": 0.0000321923362207599,

"name": "left_heel"

},

{

"x": -0.07328654080629349,

"y": -0.022688165307044983,

"z": -0.08013916015625,

"score": 0.000008332717698067427,

"name": "right_heel"

},

{

"x": 0.2948821187019348,

"y": -0.20878705382347107,

"z": -0.375732421875,

"score": 0.000050644717703107744,

"name": "left_foot_index"

},

{

"x": -0.06450705975294113,

"y": -0.3733605146408081,

"z": -0.48095703125,

"score": 0.00003734356869244948,

"name": "right_foot_index"

}

]

}

]